Why Cloud Certifications Will Supercharge Your Career in 2025

The rise of cloud computing is reshaping many industries. As businesses increasingly migrate to the cloud, demand for skilled professionals keeps climbing. Some industry forecasts put the global cloud market at more than $800 billion by 2025. A few major players dominate the scene: AWS, Azure, and Google Cloud. AWS alone holds nearly 32% of the cloud infrastructure market.

The Skills Gap in Cloud Computing

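Despite the booming demand, many employers report a shortage of qualified cloud talent. The broader reskilling challenge is large. A 2019 study from the IBM Institute for Business Value estimated that as many as 120 million workers in the world's 12 largest economies may need reskilling within three years as a result of AI and automation. That figure is not specific to cloud computing, but it shows how quickly technical skill requirements are shifting. For job seekers, a recognized cloud certification is one clear way to show they can manage modern cloud environments.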

Why Certifications Matter: Validation and Competitive Advantage

Cloud certifications serve as proof that you possess the necessary skills and knowledge. They provide a competitive edge in a crowded job market, signaling to employers that you are committed to your professional development.

Top Cloud Certifications to Pursue in 2025

AWS Certifications: Architecting, Operations, and Security

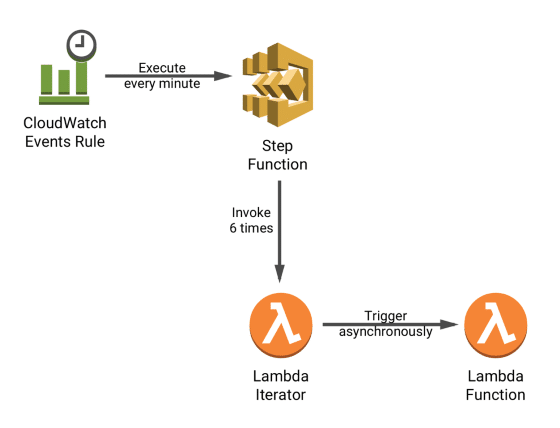

AWS offers a range of certifications, including:

- AWS Certified Solutions Architect: Ideal for those involved in designing distributed systems.

- AWS Certified Developer: Suited for developers who build applications on AWS.

- AWS Certified Security Specialty: Focused on securing data, identities, and workloads on AWS.

AWS skills are in demand at companies that run large workloads on AWS, such as Netflix and Airbnb.

Microsoft Azure Certifications: Fundamentals, Administration, and Development

Microsoft Azure also presents many certification paths:

- Microsoft Certified: Azure Fundamentals: Great for beginners.

- Microsoft Certified: Azure Administrator Associate: For those managing Azure solutions.

- Microsoft Certified: Azure Developer Associate: Tailored for developers.

Azure certifications are also valued at organizations that work closely with Microsoft's cloud. Examples include Adobe and LinkedIn, which is a Microsoft subsidiary.

Google Cloud Certifications: Associate and Professional Levels

Google Cloud continues to expand its certification offerings:

- Google Cloud Associate Cloud Engineer: A starting point for cloud roles.

- Google Cloud Professional Cloud Architect: Advanced certification for cloud architects.

- Google Cloud Professional Data Engineer: For those focused on data engineering.

Google Cloud skills are relevant at companies that run workloads on Google Cloud, such as Spotify and PayPal.

How Cloud Certifications Boost Your Earning Potential

Salary Data: What Certified Professionals Earn

Certified cloud professionals often earn more, although pay varies widely by role, experience, and location. According to Glassdoor, approximate averages are:

- AWS-certified professionals average around $120,000 per year.

- Azure-certified professionals earn about $115,000 on average.

- Google Cloud-certified professionals average around $130,000 per year.

Career Advancement Opportunities: Climbing the Corporate Ladder

Certifications can also open doors beyond a higher salary. Many employers weigh certifications when deciding on promotions and when filling specialized roles. For example, a professional-level architect certification can help you move into a cloud architect position.

Negotiating Higher Salaries: Leverage Certification as a Bargaining Chip

When discussing salary, use your certifications as a negotiation tool. Employers often value certifications, which can give you leverage when requesting a raise or better compensation.

Choosing the Right Certification Based on Your Career Goals

Aligning Certifications with Your Career Aspirations

To choose the right certification, consider where you want to go. If you aim to be a cloud architect, an AWS Solutions Architect certification may be beneficial.

Assessing Your Current Skillset and Identifying Knowledge Gaps

Take an honest inventory of your skills. Identify areas you need to improve, whether it’s cloud security or deployment.

Creating a Personalized Certification Roadmap

Map out a structured learning plan. Set timelines for studying and for taking each exam. A clear plan like this helps keep you on track.

Preparing for and Passing Your Cloud Certification Exam

Effective Study Strategies: Maximizing Your Learning Efficiency

Adopt practical study techniques. Create a study schedule, use flashcards, and join study groups to enhance learning.

Utilizing Practice Exams and Resources: Sharpening Your Skills

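Invest in practice exams and online resources. Platforms like A Cloud Guru and Udemy offer valuable preparation materials. Each provider also publishes its own free training, such as AWS Skill Builder, Microsoft Learn, and Google Cloud Skills Boost.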

Managing Exam Anxiety and Stress: Maintaining a Positive Mindset

Before the exam, practice relaxation techniques such as deep breathing. Stay positive and visualize your success.

Beyond Certification: Building a Successful Cloud Career

Networking and Community Engagement: Connecting with Industry Professionals

Networking plays a vital role in career growth. Attend cloud conferences and engage on professional platforms like LinkedIn.

Continuous Learning and Skill Development: Staying Ahead of the Curve

The cloud landscape is always changing. Continue learning new technologies and practices to stay relevant.

Building a Strong Portfolio and Demonstrating Your Expertise

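Showcase your skills through hands-on personal projects, such as deploying a small web application or automating infrastructure setup. Collect them in a portfolio that employers can review to see your cloud expertise in practice.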

Conclusion: Investing in Your Cloud Future

Cloud certifications offer real value. They validate your skills, can raise your earning potential, and open new career paths.

Start your cloud certification journey today. Take the first step toward advancing your career and securing a place in the thriving cloud marketplace.